Numalis' Commitment to Global AI Standardization: Building a Trustworthy Framework for Safe Adoption

Discover how Numalis is shaping the future of AI through active contributions to international standardization committees. Our work ensures the development of meaningful and applicable frameworks to build trustworthy and certifiable AI systems. We develop AI industry requirements and best practices to enable the confident deployment of beneficial AI.

Understanding the Need for Standardization in AI

To meet the need for public trust and for compliance with AI regulation, industry, institutions and academia are working together to standardize the fast-moving field of AI. Today, AI adoption still faces trust issues among stakeholders. AI does not fit the classical, well-established software life cycle, and public distrust remains significant when it comes to the safety and fairness of AI systems. Regulations under development propose a framework to establish trust, but they will not define the technical requirements, which only industry can establish given the current state of the art. Standardization is the way to define a unified, meaningful and achievable framework of requirements that addresses each regulation's objectives.

Standardization aims to mitigate the risks inherent in AI.

- Safety: AI algorithms have numerous applications in critical sectors where safety is paramount, such as vehicles, surveillance systems, medical diagnoses, and scoring applications. Defining appropriate safety requirements is one of the roles of standards, which will allow companies to provide the corresponding evidence of reliability.

- Bias Mitigation: AI is commonly subject to problems of bias. Bias can have several causes that can be detected at every step of the life cycle. Clear requirements across stakeholders, expressed through agreed standards, are necessary to ensure proper risk mitigation.

- Interoperability: With so many specific development environments, processes, and regulations, compatibility becomes difficult to manage, which can prevent deployment. International standards will allow for more streamlined production and mutual recognition, facilitating industrial market access across the world.

- Ethics and Legal Issues: AI is a transformative technology for all stakeholders in society, whose expectations in terms of ethical and legal conformity can vary. Standardization aims to align industry practices with societal expectations by defining a common understanding of how to make trustworthy AI.

The European AI Act and Standardization

The European Commission has made AI regulation a reality with the EU AI Act, which has been voted on and will come into force soon. This regulation is designed to allow EU citizens to benefit from AI systems with the appropriate safeguards, depending on the level of risk they pose. Systems that pose an unacceptable risk are forbidden; high-risk systems are subject to strict requirements on their design and deployment; for the rest, less stringent requirements apply.

To make the AI Act applicable in practice, the European Commission has called on all stakeholders to work with CEN-CENELEC, the European standardization body, to provide technical standards.

The European Commission has thus mandated CEN-CENELEC, through a “Standardization Request,” to provide so-called “harmonized standards,” which give organizations that apply them a presumption of conformity with the Act. To do this, CEN-CENELEC identifies, adapts if necessary, and adopts international standards already available or under development by other organizations such as ISO/IEC.

Concretely, CEN-CENELEC will produce several types of standards for AI to match the AI Act requirements:

- Harmonized Standards: These standards map directly to the AI Act articles and set out requirements that allow organizations to build a conformity assessment scheme.

- EN Standards: These are standards that result from internal developments or from the adoption of other committee standards (e.g., ISO/IEC, ETSI).

Numalis and ISO/IEC 24029 Suite of Standards

Arnault Ioualalen, CEO of Numalis, is the editor of all documents in the ISO/IEC 24029 series of standards, developed in Working Group 3 (AI Trustworthiness) of ISO/IEC JTC 1/SC 42. In this role, Arnault helps build international consensus around these documents. He also champions these standards by raising awareness of them among international stakeholders.

- Standard 24029-1: Provides an overview of the assessment of the robustness of neural networks (published in 2019).

- Standard 24029-2: Provides a methodology for assessing the robustness of neural networks using formal methods (published in 2021).

- Standard 24029-3: Provides a methodology for assessing the robustness of neural networks using statistical methods (still under development).

Arnault also actively contributes to the development of the harmonized standards called the “Trustworthiness Framework” in CEN-CENELEC JTC 21. This work is especially targeted at the requirements regarding the robustness of AI systems, which will heavily rely on the ISO/IEC 24029 series.
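
To give a feel for what a statistical robustness assessment can look like in practice, here is a minimal sketch in Python. It is not the methodology defined in ISO/IEC 24029, and it is not how Saimple works; it only illustrates the general idea of sampling perturbed inputs and measuring how often a model's decision stays the same. The `classify` model and the `empirical_robustness` function are hypothetical names introduced for this example.

```python
import random


def classify(x):
    """Hypothetical toy model: a fixed linear decision rule on two features."""
    score = 0.8 * x[0] - 0.5 * x[1] + 0.1
    return 1 if score >= 0.0 else 0


def empirical_robustness(model, x, epsilon, n_samples=1000, seed=0):
    """Estimate the fraction of random perturbations that keep the prediction.

    Each feature is shifted by a value drawn uniformly from
    [-epsilon, +epsilon]. The result is a sampling-based estimate,
    not a formal guarantee over every possible perturbation.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    reference = model(x)
    stable = 0
    for _ in range(n_samples):
        perturbed = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
        if model(perturbed) == reference:
            stable += 1
    return stable / n_samples


if __name__ == "__main__":
    # An input far from the decision boundary and one sitting right on it.
    print(empirical_robustness(classify, [1.0, 0.0], epsilon=0.1))
    print(empirical_robustness(classify, [0.0, 0.2], epsilon=0.1))
```

An estimate of this kind depends on the number of samples and on how perturbations are drawn, which is one reason a shared, standardized methodology matters. Formal methods, by contrast, aim to prove a property over an entire set of inputs.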

How to Comply with Requirements Defined in Standards

A question arises: how can the industry meet the requirements defined in the standards and achieve the expected level of reliability? The answer is relatively simple: you need to implement processes and tools that can help you provide evidence.

Conformity assessment is a matter of both technical documentation and organizational management. Each requirement from the standards, depending on its nature, can be addressed by a combination of both.

Since CEN-CENELEC is relying on ISO/IEC standards, complying with these will bring you closer to the AI Act conformity needed to introduce your AI system to the EU market.

Saimple: A Software Solution for AI Compliance

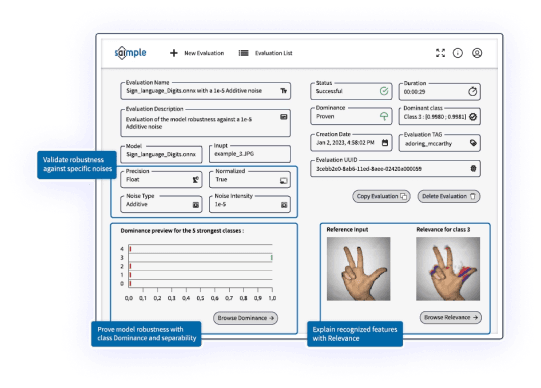

The reliability of AI is composed of many properties, two of which are AI robustness and explainability. These two aspects are directly related to the technical inner workings of AI systems and cannot be addressed solely through organizational mitigation. For these, tools and technical processes are required.

Numalis has developed Saimple, a software solution to analyze, evaluate, and document the robustness and explainability of AI algorithms throughout their lifecycle. This solution improves AI system reliability and enables compliance with standards, particularly those setting robustness requirements.

Make trustworthy AI models from the start with Saimple

Let’s Work Together

Contact us today to learn more about how Numalis can help you confidently adopt and deploy AI.
